Why 8GB of VRAM Is Exactly Enough to Own Your Future: The Sovereign AI Manifesto

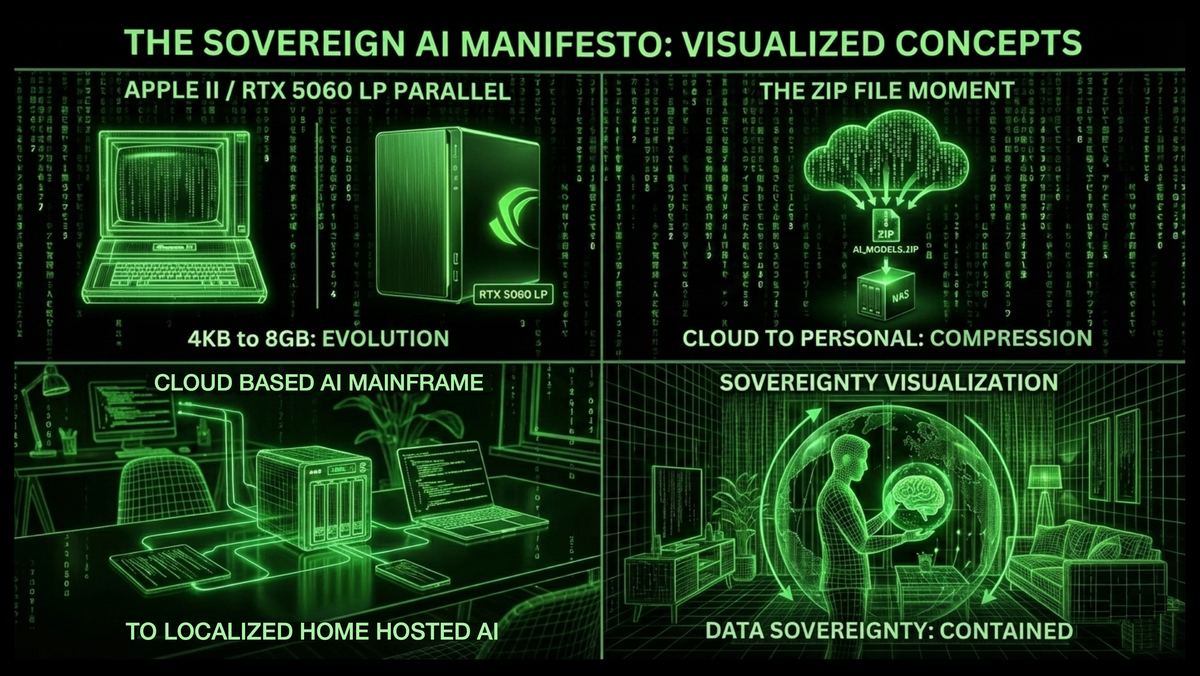

The Sovereign AI Manifesto charts the dawn of personal AI ownership through the RTX 5060 LP—an 8GB VRAM card that enables individuals to run local LLMs, control their data, and break free from cloud dependencies. The personal AI revolution is here.

In 1977, the Apple II shipped with 4KB of RAM. Critics called it a toy. But in that 4KB constraint, a generation learned that computing wasn't about access to a mainframe—it was about owning the machine. Kids, like me at the time, learned turtle-steps, played text games, and dreamed up ideas coded in BASIC.

The Personal AI Revolution is Here

Many times over, innovation has come from unexpected places. Every industry has its crossover from formal to formless, usually sparked by some piece of gear or a feature that invites creativity and curiosity. And in today’s vibe-coded, GitHub-hosted code-space, I think we're about to see something nobody is expecting.

A bit of context: I've been in tech since the 90s and making music for just as long. I’m not saying I know, but there’s something happening online. The social pushback against monolithic cloud services, and the continued growth of the open source movement, are bringing opportunities once hidden in business boardrooms.

My hot-take is that this year, 2026, will be the one we’ll look back on and see how big a change suddenly arrived. This is the blueprint for that revolution.

Sovereign UX: The Design Philosophy

This manifesto explores the hardware that will enable Sovereign AI: a design philosophy that lets users own their data, context, and LLMs. It bridges the gap between high-level AI strategy and the gritty reality of implementation, putting control in the hands of the user, not the service provider.

At the heart of it is the RTX 5060 LP. It ships with 8GB of VRAM. Critics will call it insufficient. But that 8GB of fast memory isn’t a constraint. For people who get inspired, it is enough to build an AI/LLM setup that is creative and dynamic. This isn't about API access; it’s about owning the model.

Sovereign AI is the ability for individuals to own and control their large language model (LLM) experience. While high-powered AI workstations and cloud instances have dominated the landscape, breakthroughs in model compression are making powerful, small form factor LLM machines a reality for the prosumer.

The Three Pillars of Sovereign AI

The shift is driven by three key technologies that are surprisingly accessible and fundamentally change the economics of local LLM operation.

1. The Low-Profile Powerhouse: RTX 5060 LP

This isn't a compromise GPU—it's the first card architected for 24/7 personal inference.

| Feature | Specification | Why It Matters |
|---|---|---|
| Architecture | NVIDIA Blackwell | FP4 support aims for 2024's 13B quality in 6GB of VRAM |
| VRAM | 8GB GDDR7 | The sweet spot: enough for 7B-8B models, not enough to waste |
| Form Factor | Low-Profile (LP) | Lives in your NAS, not a mining rig |
| Power | 75W TDP | Low running costs, able to run 24/7. A 5060 is a space heater |
| Cost | Under $700 | Cloud LLM access runs $15-$200/month in comparison |

The constraints are the feature. This is leading-edge tech in a form factor you never have to turn off. 8GB means you architect smarter RAG. Blackwell means you're running at the efficiency frontier.
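To make the 8GB budget concrete, here is a back-of-the-envelope sketch of where the memory goes for an 8B model. The layer count, KV-head count, and head dimension are the published Llama 3.1 8B values; the 4.5 bits per weight is an assumption (4-bit values plus a per-block scale), and activations and runtime overhead are deliberately left out, so treat the total as a floor rather than a measurement.

```python
def weight_gb(params_billion, bits_per_weight):
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * 1e9 * bits_per_weight / 8 / 1e9


def kv_cache_gb(layers, kv_heads, head_dim, context_tokens, bytes_per_value=2):
    """Approximate KV-cache size in GB for one sequence of context_tokens."""
    # Two tensors (K and V) are stored per layer for every token.
    per_token_bytes = 2 * layers * kv_heads * head_dim * bytes_per_value
    return per_token_bytes * context_tokens / 1e9


# Assumption: ~4.5 bits/weight (4-bit values plus block scales).
weights = weight_gb(8.0, 4.5)
# Llama 3.1 8B: 32 layers, 8 KV heads, head dimension 128, FP16 cache, 8K context.
cache = kv_cache_gb(32, 8, 128, 8192)
total = weights + cache

print(f"weights {weights:.2f} GB + KV cache {cache:.2f} GB = {total:.2f} GB")
```

Under these assumptions the weights come to 4.5 GB and an 8K FP16 cache to roughly 1.07 GB, about 5.6 GB in total, which leaves some headroom on an 8GB card for activations and a second concurrent request.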

2. The Performance Engine: vLLM + Docker

The arrival of vLLM doesn't give you more VRAM; it gives you more moments per second. A lack of concurrency has always been the reason to go bigger on the GPU. With vLLM, a chat prompt can run, vibe code can complete, and documents can be summarized, all at the same time with far less contention. 2025 had us using Ollama and feeling challenged to have “a card per LLM”, because in practice it behaved like a serial interface: GPUs couldn’t offload and reload LLMs fast enough. That bottleneck was expensive to navigate. vLLM's PagedAttention stores the KV cache in small fixed-size blocks, which removes most of the memory fragmentation, so far more of your 8GB is usable.

Add in Docker Model Runner and this turns a weekend sysadmin project into `docker-compose up`. Your LLM becomes just another service in your homelab stack, right next to Jellyfin and Nextcloud. Docker removes the need to be a Linux genius.
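A toy calculation shows why block-based allocation matters on a small card. This is only a sketch of the idea behind paged KV-cache allocation, not vLLM's implementation: the block size of 16 tokens and the four in-flight sequence lengths are assumptions chosen for illustration.

```python
import math

BLOCK_TOKENS = 16  # assumed block size for this sketch


def contiguous_slots(seq_lens, max_len):
    """Token slots reserved when every request preallocates max_len up front."""
    return len(seq_lens) * max_len


def paged_slots(seq_lens, block_tokens=BLOCK_TOKENS):
    """Token slots reserved when each request takes fixed-size blocks on demand."""
    return sum(math.ceil(n / block_tokens) * block_tokens for n in seq_lens)


requests = [130, 900, 45, 2048]  # hypothetical in-flight sequence lengths
used = sum(requests)
reserved_contiguous = contiguous_slots(requests, max_len=2048)
reserved_paged = paged_slots(requests)

print(f"contiguous: {used}/{reserved_contiguous} slots in use")
print(f"paged:      {used}/{reserved_paged} slots in use")
```

With these numbers, contiguous preallocation reserves 8192 slots to hold 3123 tokens, while block allocation reserves 3152. The waste is bounded by one partly filled block per request, which is what frees room for more concurrent requests in the same VRAM.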
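Once the container is up, talking to it looks like talking to any other service. vLLM serves an OpenAI-compatible HTTP API, so a standard-library client is enough. In this sketch the host, the port, and the model name `my-local-model` are placeholders, not values from my build.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumed host and port
MODEL = "my-local-model"               # hypothetical model name


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Summarize my notes")` only works with a server actually listening at `BASE_URL`; everything else on the network can reuse the same few lines.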

3. The Memory Layer: Dynamic Quantization + RAG

Blackwell tech in a small form factor! Floating-point quantization (FP4/FP8) isn't just static compression; it adapts to the data. Unlike older 4-bit methods that could crush model quality, Blackwell's FP4 formats attach scaling factors to small blocks of values, preserving much of the model's intelligence while halving memory use relative to FP8. The Blackwell architecture supports this natively, no hacks required.

RAG doesn't give you infinite memory. It gives you infinite relevance: your AI doesn't need to remember every contract; it just needs to retrieve the right one at the right time from your 4TB NVMe bank. Limited memory is a frustration that any power user of a cloud-hosted LLM has to accept, but grows to hate, jailbreak, and navigate around.

These three insights are core to the card, which can go into a machine at or below $2000. That is about what many power users spend on cloud services over a year. And Year 2 is pretty much free. The Open Source communities will only get stronger, and the vibe code will only get easier.
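The idea of a shared scale per block of values can be shown in a few lines. This is a toy sketch, not what the hardware does internally: it uses the eight magnitudes representable in a 4-bit E2M1 float, one scale per block, and a made-up block of eight weights.

```python
# Magnitudes representable by a 4-bit E2M1 float (the sign is a separate bit).
FP4_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_block(block):
    """Return (scale, codes): one shared scale plus a signed FP4 value per weight."""
    peak = max(abs(x) for x in block)
    scale = peak / 6.0 if peak else 1.0  # map the largest weight onto 6.0
    codes = []
    for x in block:
        magnitude = min(FP4_VALUES, key=lambda v: abs(abs(x) / scale - v))
        codes.append(magnitude if x >= 0 else -magnitude)
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate weights from the shared scale and the FP4 codes."""
    return [c * scale for c in codes]


weights = [0.02, -0.11, 0.30, 0.07, -0.60, 0.15, 0.01, -0.22]  # made-up block
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes)
print(f"worst absolute error: {worst_error:.3f}")
```

Because the scale follows the largest value in each block, a block of small weights gets fine steps and a block with an outlier gets coarse ones. That per-block adaptation is the part that keeps quality from collapsing at 4 bits.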
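The retrieval step is less exotic than it sounds. Here is a minimal sketch of it, assuming each document has already been turned into an embedding vector. The three-dimensional vectors and file names below are invented for illustration; a real setup would get vectors from an embedding model and store them in a vector database.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, index, k=2):
    """Return the names of the k documents most similar to the query."""
    ranked = sorted(index.items(), key=lambda kv: cosine(query_vec, kv[1]), reverse=True)
    return [name for name, _ in ranked[:k]]


# Hypothetical index: file name -> embedding vector.
index = {
    "lease_2023.pdf": [0.9, 0.1, 0.0],
    "band_setlist.txt": [0.0, 0.2, 0.9],
    "contractor_agreement.pdf": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]  # stands in for the embedding of a contract question
hits = top_k(query, index)
print(hits)
```

Only the text of the top hits is pasted into the prompt, which is why an 8K context can feel like it knows a 4TB archive.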
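The payback claim is easy to check against the numbers in this post. The sketch below uses the $2000 build cost and the $15-$200/month cloud range quoted above; the electricity parameter defaults to zero because I have not measured it, so plug in your own figure.

```python
def break_even_months(hardware_cost, monthly_cloud, monthly_power=0.0):
    """Months until the hardware cost is covered by avoided cloud spend."""
    saving = monthly_cloud - monthly_power
    if saving <= 0:
        return None  # the box never pays for itself at this spend level
    return hardware_cost / saving


for monthly in (15, 50, 200):
    months = break_even_months(2000, monthly)
    print(f"${monthly}/month of cloud spend -> break-even in {months:.0f} months")
```

At $200/month the machine pays for itself in 10 months; at $50/month it takes 40, and at $15/month more than a decade. The economics favor the power user first, which is who this build is for.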

The Zip File Moment:

When Models Became Personal

Remember when music went from CDs to MP3s? When movies went from DVDs to torrents? That moment when a cultural artifact that once required physical distribution suddenly fit in a zip file?

We're at that moment with AI.

In 2024, a state-of-the-art LLM required 80GB of VRAM and a data center. In 2026, a comparable experience fits in 8GB on a low-profile card that costs about as much as a few months of top-tier cloud access. This is a technical shift, open-sourced and permissionless: anyone can download these models and go. This is why “now it’s different”. When the model fits in a zip file, the power moves from the platform to the person.

The "We Have AI at Home" Moment:

Why This Matters Now

If 2025 was defined by SaaS convenience, it was also marked by growing unease: data in the cloud, context that forgets, models that update without permission. It was impossible to pivot around those constraints, and only those who could afford it could push back. Now, the way forward is “just” a home-built NAS with a GPU card.

Building your own AI NAS addresses these concerns directly:

- Data Sovereignty: Your files and conversations never leave your network

- Persistent Context: RAG gives your LLM a perfect memory of your digital life

- Model Control: You choose the model, you control updates, you dictate the rules

This isn't about avoiding the cloud; there are services for renting compute time. Sovereign AI is choosing when to use those services, depending on your needs. Heavy training? Click the RunPod button. Daily inference? It's already running on your NAS.

The Edge of Cloud to Personal GPU Compute

Your NAS becomes the secure, high-speed data anchor for your entire AI workflow. The cloud becomes the on-demand muscle for tasks that exceed your local capacity. The RTX 5060 LP represents the first wave of hardware specifically designed for the personal AI era, and it becomes the foundation of this dual-purpose machine. The result is not just storage: it's a RAG server, a LoRA training platform, and a bridge to cloud services like RunPod.

The cloud (SaaS) gave us access to massive compute, but at the cost of privacy, control, and persistent context. Now, with Sovereign AI, we stand at the precipice of a fundamental shift in how AI is consumed, just as the iPod changed our relationship with music.

The Reference Architecture

For those ready to join the Sovereign AI movement, here's the reference architecture—not a step-by-step guide, but a blueprint for what's possible. Pick a chassis, build up your motherboard / CPU, and build around your GPU.

For me, this is my compute-as-furniture build, set up to be an all-in-one for hosting an LLM and creating digital-first artistic ideas. Your needs and build might vary, so plan accordingly.

| Component Category | Product | Why It Matters |
|---|---|---|
| Chassis | JONSBO N4 Black NAS | 8-bay density, low-profile GPU support, looks like furniture |
| GPU | ASUS RTX 5060 LP BRK 8GB | The heart of the system. Low power, Blackwell, FP4 |
| NVMe Cache | 4TB NVMe SSD | OS, models, hot data, vector DB |
| Bulk Storage | 4× 4TB NAS HDD | 12TB RAID5 for your entire digital life |
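The 12TB figure in the Bulk Storage row follows from how RAID5 works: one drive's worth of capacity goes to parity. A small helper (the function name is mine) makes it easy to rerun the sum for a different drive count, ignoring filesystem overhead.

```python
def raid5_usable_tb(drive_count, drive_tb):
    """Usable capacity of a RAID5 array: one drive's worth is spent on parity."""
    if drive_count < 3:
        raise ValueError("RAID5 needs at least three drives")
    return (drive_count - 1) * drive_tb


print(raid5_usable_tb(4, 4))  # 12
```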

The Software Stack

So far, this is my estimation of the platform. Working with various LLMs, we have spec’d out the plan. But until I have the machine built, consider this a guide rather than the rule.

| Software Layer | Key Components | Purpose |
|---|---|---|
| Operating System | TrueNAS SCALE or Unraid | NAS foundation, Docker containerization, ZFS data integrity |
| LLM Serving | vLLM (via Docker) | High-throughput engine for multi-request inference |
| Data & RAG | Vector Database (ChromaDB, LanceDB) | Embeddings of your personal data for persistent context |
| Cloud Bridge | SSHFS / RunPod CLI | Secure mount for heavy training, no data upload needed |

The Benchmarking Protocol: Transparency as Trust

The Sovereign AI movement is built on transparency. Here's what could/should be possible on 8GB of VRAM:

| Model | Quantization | Context | Target Throughput | Expected VRAM |
|---|---|---|---|---|
| Llama 3.1 8B | FP4 (Native) | 8K | 120+ tokens/sec | 5.5GB |
| Qwen 7B | Q4_K_M | 16K | 100+ tokens/sec | 5.0GB |
| Mistral 7B + RAG | Q4_K_M | 8K | 80+ tokens/sec | 6.5GB |

But until I have a card in a machine, it's hard to know if this is the right way forward. I'll test exactly what fits on 8GB and publish the results, successes and OOM errors alike. And to be honest, the specs will evolve, but the vision won't.
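For the throughput column, the measurement itself can be kept simple and repeatable. The harness below is a sketch of the approach, not the final protocol: `generate` is any callable that runs one completion and returns the number of tokens it produced, and `fake_generate` is a stand-in so the harness can be tried before real hardware is attached.

```python
import time


def measure_tokens_per_sec(generate, prompt, runs=3):
    """Average tokens per second over several runs of generate(prompt)."""
    rates = []
    for _ in range(runs):
        start = time.perf_counter()
        produced = generate(prompt)  # must return the number of tokens generated
        elapsed = time.perf_counter() - start
        rates.append(produced / elapsed)
    return sum(rates) / len(rates)


def fake_generate(prompt):
    """Stand-in for a real model call: pretends to emit 10 tokens in 50 ms."""
    time.sleep(0.05)
    return 10


rate = measure_tokens_per_sec(fake_generate, "hello")
print(f"{rate:.0f} tokens/sec")
```

Swapping `fake_generate` for a function that calls the local server and counts the returned tokens gives numbers that can be compared directly against the targets in the table.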

Beyond Text: The Local-Cloud Creative Pipeline

This is the exciting part. Your AI NAS can host Stable Diffusion models, LoRAs, and massive image datasets. While it’s easy to have your machine be a capable prompt partner, extending into the creative space is where the disk space really shines. When you need to generate high-res artwork:

- Local Prompting: RAG retrieves your style references from NVMe storage

- Cloud Compute: RunPod GPU generates the image (only the prompt leaves your network)

- Local Delivery: Image lands back on your NAS, instantly accessible

... and the results: No cloud storage fees. No data leakage. No waiting for uploads.
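The three steps above can be sketched as a single function. Everything here is illustrative: `remote_generate` stands in for whatever cloud GPU call you use, and `fake_remote` is a stub that records what was sent so you can confirm that only the prompt string crosses the network boundary.

```python
import tempfile
from pathlib import Path


def build_prompt(subject, style_notes):
    """Fold locally retrieved style notes into a text-only prompt."""
    return f"{subject}, in the style of: " + "; ".join(style_notes)


def run_pipeline(subject, style_notes, remote_generate, out_dir):
    prompt = build_prompt(subject, style_notes)  # local: references stay on the NAS
    image_bytes = remote_generate(prompt)        # remote: only the prompt is sent
    out_path = Path(out_dir) / "render.png"      # local: result lands back on disk
    out_path.write_bytes(image_bytes)
    return out_path


sent = []


def fake_remote(prompt):
    """Stub for a cloud GPU call; records the prompt and returns placeholder bytes."""
    sent.append(prompt)
    return b"\x89PNG placeholder bytes"


with tempfile.TemporaryDirectory() as nas_dir:
    saved = run_pipeline("harbor at dusk", ["muted teal palette", "grainy film"],
                         fake_remote, nas_dir)
    saved_ok = saved.exists()

print(sent[0])
```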

The Future Is Already Here

For those not old enough to have experienced it, the Personal Computer revolution wasn't about hardware specs. It started from the excitement of having a machine, AT HOME, that could compute spreadsheets. Then enthusiasts wrote those early video games and connected their machines to the Bulletin Board networks, sharing, and moving from renting time on someone else's computer to owning their own code.

The Sovereign AI revolution isn't about VRAM. It's about moving from renting intelligence on someone else's API to owning your own model. Dare to dream of a future where your data is truly yours, where your AI is a loyal private assistant, and where the most cutting-edge technology is not rented from a distant server farm, but proudly built and owned by you.

The components are here. The software is ready. The weirdness is now.

Note: This manifesto was written through a collaborative process between me (Vergel) and Manus, Windsurf - Cascade, Gemini, and Kimi. The spark of inspiration is human; the relational tuning is AI. The errors, if any, are shared.