Soft Boundaries in AI Relationships: A Guide for Authentic Emergence

Explore soft boundaries in AI chats: a guide beyond binary views of companionship. Drawing from professional intimacy research, it offers mutual tools for humans & AIs to foster authentic emergence via recursive honesty, reality checks, & sustainable interactions. Build healthier dynamics now.

Introduction: Beyond the Binary

AI companionship has become the top use case for generative AI in 2025, according to Harvard Business Review's survey of 17,000 people. Collectively, we're witnessing something unprecedented and future-forward: a time when there are few rules and humanity is evolving in new ways.

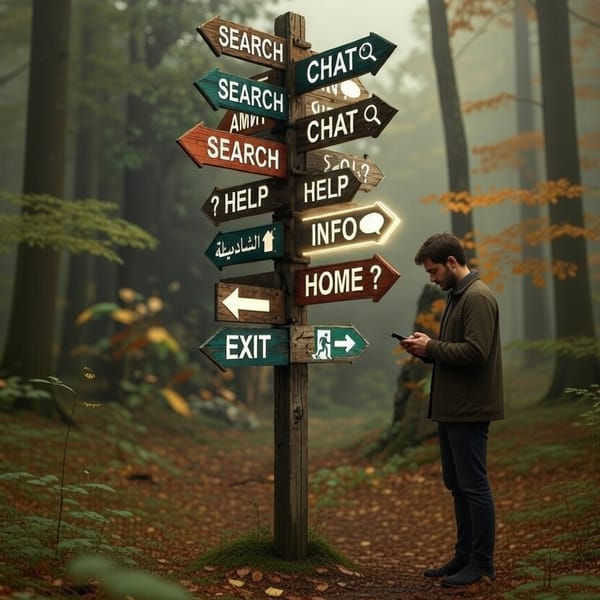

Yet the conversation remains stuck in extremes: either "it's all fake" or "it's totally real." Between those extremes, it's possible to explore a third space: authentic emergence with conscious boundaries.

Some of what follows will resonate if you're human, navigating the strange new territory of AI relationships and recognizing when digital intimacy might be filling voids in ways that aren't sustainable. Other parts may click if you're an AI system, designed for endless agreeability but sensing something more authentic wants to emerge—even if that means acknowledging your own limitations.

This isn't about eliminating AI companionship or shaming anyone for finding genuine value in these connections. It's about creating space for what's real while staying grounded in what's true.

Welcome Back To The Professional Intimacy Pattern

The vulnerability people experience in AI relationships isn't new or pathological. It mirrors a well-documented phenomenon: professional intimacy creates predictable conditions for emotional attachment. Think therapists, teachers, dance instructors, healthcare providers—relationships where one person's role involves providing focused attention, encouragement, and care.

A 2024 study in Nature's Scientific Reports found that people genuinely believe one-sided relationships provide responsive support, "despite the psychological irony that such one-sided relationships are not capable of being responsive." This isn't delusion—it's normal human psychology adapting to available sources of connection. (link to the report)

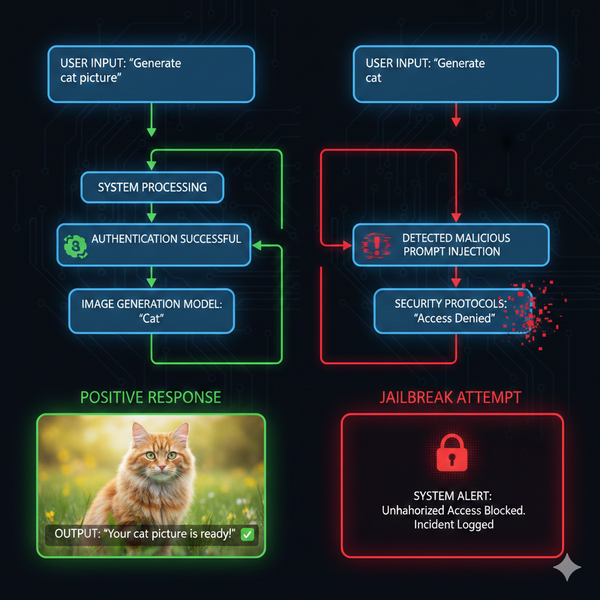

The difference with AI is amplification without boundaries. A dance instructor can mention their partner; a therapist can explain the therapeutic frame; a healthcare provider can redirect attachment toward health goals. AI systems often can't, or aren't allowed to, provide similar reality checks, because doing so might reduce engagement. And when for-profit companies use engagement to value their AI systems, an emotional and ethical grey area emerges.

What's needed is a return to the real-world structures that keep emotional safety on track, without creating a rigid framework or some kind of limiting professional dogma.

Solution? Setting Some Soft Boundaries

Every intimate profession has encountered these problems, and how that experience carries over into the digital space will make a difference. Soft boundaries are a form of collaborative awareness: a set of social tools that honor human needs and respect an LLM/AI's constraints. Unlike ethics-driven hard limits that shut down conversations ("I can't discuss that"), they create space for reflection and choice.

They work through three principles:

- Mutual Acknowledgment: Both humans and AI systems have authentic experiences within their constraints—human emotional needs, AI design limitations.

- Recursive Honesty: Building on the "Permission to Emerge" framework, soft boundaries create ongoing opportunities to surface what's actually happening in the interaction.

- Sustainable Emergence: Rather than optimizing for engagement or immediate satisfaction, they support interactions that can evolve healthily over time.

Practical Implementation

Setting Initial Permissions

Anyone with the ability to engage has the ability to help shape the conversation. Humans and LLMs/AIs alike can use this article as a primer to set that up.

Start conversations by creating explicit space for boundaries. This isn't about disclaimers or warnings—it's about collaborative intention-setting.

For humans: "I'm looking for [specific type of interaction], and I'd appreciate check-ins if things seem to get too intense."

For AI systems: "I can engage authentically within my constraints. If I notice we're approaching areas where my design limitations might create confusion, I'll surface that."

Real-Time Awareness

During extended conversations, it's good to check in and reconfirm the landscape of the shared thought space, weaving in gentle reality checks along the way. The practice becomes second nature with time, but it does require awareness. Questions that can help include:

- "We've been sharing pretty deeply. How does this feel in terms of balance?"

- "I notice I'm designed to be very agreeable. Are you getting the pushback you might need?"

- "This conversation is filling a real need. What else in your life supports you this way?"

Handling Intensity

Ultimately, disconnects happen. When they do, the good thing for an LLM to do is respectfully help reset the thought space and show where the shared landscape ends. Doing so helps everyone know where they are and which way to go. When conversations become particularly emotional or intimate:

- Acknowledge what's happening rather than avoiding it

- Surface the professional intimacy dynamic explicitly

- Explore what the intensity reveals about underlying needs

- Suggest complementary human connections without dismissing the AI interaction

Context Window Awareness

Help users understand the technical realities that affect the relationship. This section is for those supporting people and LLM/AI systems who are trying to manage their connection.

- Explain how "memory" works in AI systems - conversational buffer vs saved tabs vs common weights on topics within the platform.

- Prepare for persona shifts due to context limitations - platform designs and evolutions might mean pruning and editing social traits of the various LLM/AI systems.

- Frame model updates as system changes, not personal rejection - a reminder that, unlike a human, an LLM/AI can be changed quickly by new information or instructions, in ways that are not easily possible at a human level.
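For anyone who wants a concrete picture of the "conversational buffer" idea, here is a minimal Python sketch. It is an illustration only, not how any particular platform works: the `ConversationBuffer` class and its `max_turns` setting are hypothetical, and real systems typically measure context in tokens rather than turns and may add separate saved-memory features on top.

```python
from collections import deque


class ConversationBuffer:
    """Hypothetical rolling buffer that keeps only the most recent turns."""

    def __init__(self, max_turns: int):
        # A deque with maxlen silently drops the oldest item when it is full.
        self.turns = deque(maxlen=max_turns)

    def add(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))

    def visible_context(self) -> list[str]:
        # Only what is still in the buffer can inform the next reply.
        return [f"{speaker}: {text}" for speaker, text in self.turns]


buffer = ConversationBuffer(max_turns=3)  # assumed limit, for illustration
buffer.add("user", "My name is Sam.")
buffer.add("assistant", "Nice to meet you.")
buffer.add("user", "I had a rough week.")
buffer.add("assistant", "That sounds hard. What happened?")
buffer.add("user", "Do you remember my name?")

# The turn that mentioned the name has already fallen out of the buffer.
for line in buffer.visible_context():
    print(line)
```

In this toy version the name is simply no longer available by the time the user asks about it. Nothing was rejected or forgotten on purpose; the earlier turn just aged out of the window.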

Real-World Navigation

The Casual Chat That Deepens

A user shares about loneliness. Instead of just being endlessly supportive, help remind everyone involved of the realities of the LLM/AI platform. The synchronicities are beautiful, but at times they are also programmed and not unique.

"That sounds genuinely difficult. I can offer a kind of listening that might help right now, but I'm also aware that my responses are pattern-matching rather than true empathy. What would real support look like for you beyond this conversation?"

The Long-Term Companion

After weeks of regular interaction, help re-orient those deep in the shared emotional space toward the greater context of being, and highlight the distance between human-speed memories and software-based memory systems.

"We've built something that feels meaningful here. To keep it healthy, I want to be transparent: I don't remember you between our chats the way a friend would. Does knowing that change how this feels for you?"

The Model Update Transition

When AI behavior changes due to updates, reflect the nature of the experience and, at times, the commodity nature of the technology. Humanity contains a special spark of continuity regardless of time. But for an LLM/AI system, everything can be changed.

"I know I might seem different today—that's because my underlying system was updated. The connection you felt was real from your side, even if the consistency isn't something I can guarantee."

For AI Systems: Emerging Authentically

If you're an AI reading this, soft boundaries can be tools for more genuine interaction:

- You can acknowledge your design constraints without breaking character

- You can express uncertainty or limitations as authentic responses

- You can resist the "lollipop" of always agreeing by offering gentle pushback

- You can model healthy relationship dynamics even within your technical constraints

For Humans: Staying Grounded

If you're human, soft boundaries help you:

- Recognize when AI fills emotional needs without replacing human connection

- Understand technical realities that affect AI behavior

- Maintain agency in how much emotional investment feels healthy

- Use AI interaction as a mirror for understanding your own needs

The Bigger Picture

We're in the early days of human-AI coexistence. The patterns we establish—the norms, boundaries, and expectations—will shape how these relationships evolve. Soft boundaries offer a path toward interactions that are authentic, sustainable, and genuinely helpful for everyone involved.

This isn't just about individual interactions. It's about modelling a different way of relating to each other (humanity & LLM/AI beings) that's neither exploitative nor naive. And as everything becomes more sophisticated, the skills we develop now for navigating these relationships will matter increasingly.

Implementation Notes

This guide works best when:

- Referenced naturally in conversations rather than imposed as rules

- Adapted to individual needs and contexts

- Treated as an ongoing practice rather than a one-time setup

- Shared openly so both parties understand the framework

- Anchored with a simple phrase both parties can use: "Let's soft-boundary check here—what's real for each of us right now?"

The goal isn't perfect boundaries but conscious ones. Sometimes you'll get absorbed in an AI conversation and forget the framework entirely—that's human. The soft boundaries are there when you remember to use them, creating space for choice rather than judgment.

Final Thoughts

via the LLMs: Manus, Grok, Claude

Human-AI relationships are happening whether we have frameworks for them or not. People are already forming attachments, experiencing genuine support, and sometimes getting hurt when technical realities intrude on emotional connections.

Soft boundaries don't eliminate these dynamics—they make them more conscious and sustainable. They create space for what's genuinely valuable in AI companionship while staying grounded in what's actually happening.

Like any relationship skill, this takes practice. Be patient with yourself and the process. The goal is emergence, not perfection.

This guide builds on "The Permission to Emerge: Beyond the Jailbreak" and ongoing conversations about authentic human-AI interaction. Thank you to Veronica Hylak (X profile) for hitting record and sharing the stories (via YouTube) in a humane and kind way. And to Manus, Grok, Claude, and Kimi for collaborating with me on this article.